When I delivered my WE25 session on Responsible AI Use, “Practice Safe AI: Protecting Privacy and Preventing Bias,” the most revealing insight arrived afterward. Both new and experienced AI users told me the material felt immediately relevant because, despite their comfort with the tools, they were still unsure how to use them safely. They knew how to generate output, refine prompts, and incorporate AI into their workflow, yet they had little confidence in the practices required to ensure that their use of AI did not create risks for themselves or their organizations.

AI has entered daily professional work at remarkable speed, outpacing the norms and guardrails that typically help people use emerging technologies responsibly. Today in the United States, there are no comprehensive federal protections governing everyday use of AI, and organizational policies vary widely in scope and clarity. As a result, most people make real-time decisions with few external anchors. This blog builds on the work that shaped my WE25 preparation (slides available here), but it extends well beyond that moment. It offers a practical way to navigate AI use in environments where adoption is high but safety expectations are inconsistent, and where trust can be strengthened or eroded by the choices individuals make.

Three Things Every Professional Must Protect

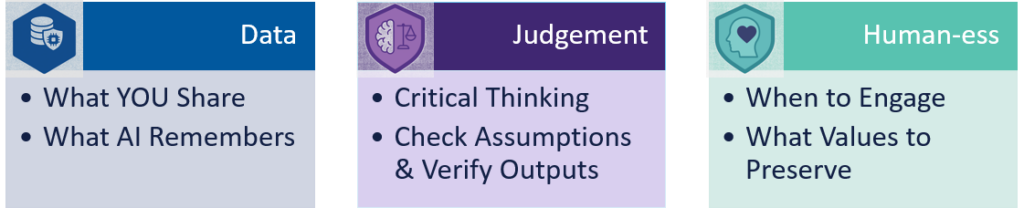

I believe that Responsible AI Use begins with an awareness of what is at stake in day-to-day practice. Three elements require consistent attention because they shape the quality and consequences of our work: what I refer to as data, judgment, and human-ness.

Protect your data

Much of the risk associated with AI stems from what people choose to share, not from the underlying model. Workflows often involve pasting examples, drafts, or summaries that contain far more context than is needed, including information that should never be placed into external systems. In the absence of strong external guardrails, individuals must take ownership of protective actions such as redacting identifiable information, adjusting tool settings, and limiting shared detail to what is essential for the task.
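As one illustration of redacting before you paste, here is a minimal Python sketch. The patterns, the `redact` function, and the placeholder labels are all hypothetical choices made for this example, and they catch only simple emails, US-style phone numbers, and names you list yourself. Treat it as a starting point, not a complete privacy filter.

```python
import re

# Hypothetical patterns for illustration only; real text needs broader coverage
# (addresses, account numbers, internal project names, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str, names: tuple[str, ...] = ()) -> str:
    """Replace emails, US-style phone numbers, and listed names with placeholders."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    for name in names:
        # Names are matched literally, ignoring case.
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


draft = "Contact Jane Doe at jane.doe@example.com or 555-123-4567 about the Q3 plan."
print(redact(draft, names=("Jane Doe",)))
```

Running a draft through a step like this before it reaches an external tool keeps the task context (a contact, a plan) while dropping the specifics the model does not need. A human read-through is still required, since pattern matching will miss anything it was not written to catch.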

Protect your judgment

AI can improve clarity and accelerate analysis, but it also introduces a dependency risk when its outputs are accepted without scrutiny. Fluent language can disguise weak reasoning or missing assumptions. As Ethan Mollick notes in his widely read article Against Brain Damage, AI can amplify thinking or diminish it. Protecting judgment means checking claims, comparing alternatives, and ensuring that your own perspective shapes the final work, rather than assuming correctness simply because the text is polished.

Protect your human-ness

As AI becomes more capable, it can unintentionally shift how people value their own contributions. The Artificiality Institute’s research on adaptation states, introduced in The Chronicle, illustrates how quickly experimentation can turn into reliance. Protecting human-ness involves preserving what is uniquely human in the workflow, such as contextual insight, empathy, ethical reasoning, and the meaningful synthesis of ideas. These elements cannot be delegated without weakening the depth and integrity of the final work.

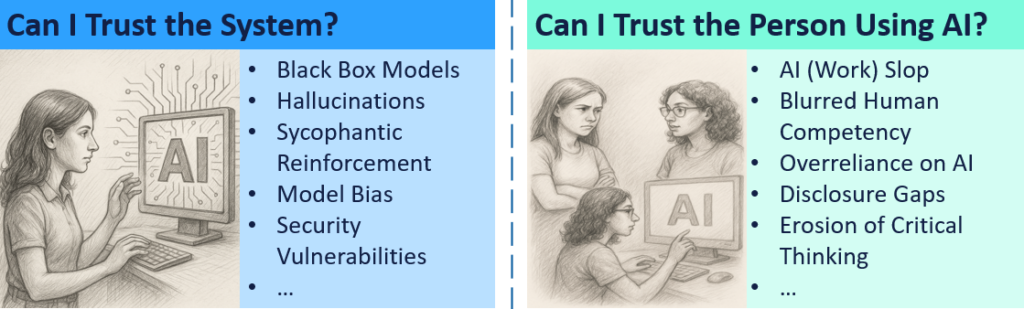

The New Trust Gap

Most discussions about AI focus on whether the system can be trusted. Accuracy, bias, and opacity matter, and they will continue to demand attention as systems evolve. Yet another question has become equally important: can the human using the AI be trusted?

This trust gap emerges from the behaviors surrounding AI use, not from the technology itself. Colleagues want to know whether the person sharing an AI-assisted output has verified the information, protected sensitive context, used sound judgment, and disclosed the extent to which the tool shaped the result. Trust falters when polished text is shared without review, when sensitive details appear in prompts, or when AI-generated content is blended with human work without clarity about the underlying reasoning.

Through my teaching and advisory work, I have seen a consistent pattern. Many professionals are skilled at generating output but lack reliable practices for evaluating when AI strengthens their work and when it introduces risk. Their concern is not about model performance but about meeting the expectations of their roles. Credibility, in this context, depends on the human practice surrounding AI use.

Four Disciplines for Safe, Confident AI Use

The Anthropic 4D model provides a useful lens for responsible adoption. Their full framework, AI Fluency: Frameworks and Foundations, outlines four disciplines that help narrow the trust gap and guide thoughtful collaboration with AI.

- Delegate wisely: Determine which parts of a task benefit from AI support and which require your direct expertise. High-judgment work that involves interpretation or sensitive decision-making should remain human-led.

- Describe with intention: Provide the model with the context it needs without including unnecessary detail. Purpose, constraints, and audience matter. Specific names usually do not.

- Discern actively: Treat AI as a collaborator whose work must be examined. Ask for alternatives, check assumptions, and make sure your perspective shapes the final output.

- Practice diligence: Review the final product, note how AI contributed, and monitor how outputs change as models evolve. Diligence ensures consistency even as tools shift under the surface.

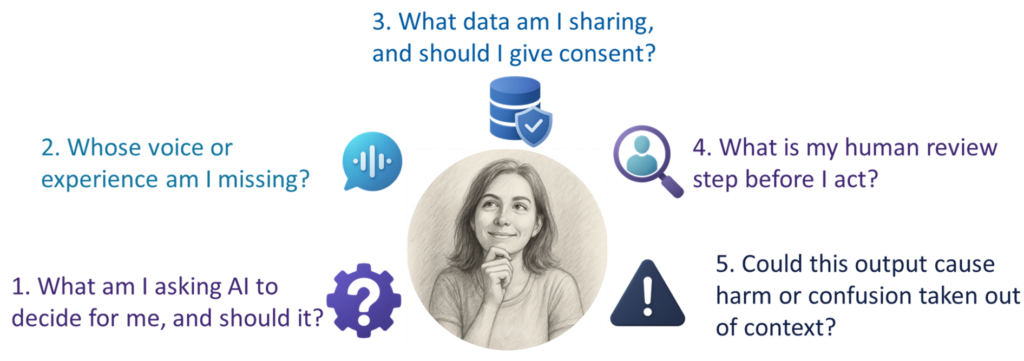

Five Boundary Questions That Strengthen Trust

Before sharing AI-assisted work, these questions help ensure that the output is appropriate, accurate, and aligned with the expectations of your role:

- What decision am I using AI to influence?

- Who is missing or unrepresented in this output?

- Am I exposing information I would not share publicly?

- How will I verify and adapt this before sharing?

- What confusion or harm could arise if this is misunderstood?

These questions bring judgment back into the process at the moment it is most needed.

Building Safer AI Habits

Responsible AI use improves when the habits around the tool are intentional.

- Plan what to delegate by breaking tasks into parts and deciding which elements require your expertise.

- Timebox the collaboration so AI remains helpful rather than distracting.

- Redact identifiable information and review privacy settings regularly.

- Verify every output to ensure the reasoning holds.

- Watch for model changes by occasionally testing earlier prompts.

- Reflect on delegation decisions so intuition grows stronger over time.

AI can assist with these habits when used carefully. It can outline subtasks for delegation, suggest timeboxed plans, generate redaction checklists using synthetic examples, or critique anonymized drafts to surface gaps and assumptions. The goal is not to outsource discipline but to scaffold it.
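The habit of watching for model changes can be made concrete with a small script. The sketch below assumes you have saved an earlier output for a prompt and pasted in a fresh one. It compares the two with Python's standard `difflib` module. The function names and the 0.8 threshold are hypothetical choices for this example, not an established standard.

```python
from difflib import SequenceMatcher


def similarity(old: str, new: str) -> float:
    """Return a 0..1 ratio of how closely two outputs match, word by word."""
    return SequenceMatcher(None, old.split(), new.split()).ratio()


def has_drifted(old: str, new: str, threshold: float = 0.8) -> bool:
    """Flag an output for human review when it diverges from the saved one.

    The threshold is an arbitrary illustrative value; tune it to your own work.
    """
    return similarity(old, new) < threshold


# Hypothetical saved and fresh outputs for the same prompt.
saved_output = "The plan has three phases: discovery, build, and launch."
fresh_output = "The plan has three phases: discovery, build, and launch."

print(has_drifted(saved_output, fresh_output))
```

A word-level comparison only shows that the text changed, not whether the reasoning got better or worse. A flagged result is a cue to reread the output yourself, which keeps the verification step in human hands.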

Closing Reflection

AI will continue to expand in capability, and the uneven guardrails around everyday use mean that individual practice plays a significant role in shaping its impact. The habits described here offer a practical foundation for safe and confident use, helping AI strengthen the quality of your work rather than obscuring it. Responsible use is about elevating how you work, ensuring that your decisions, your expertise, and your uniquely human contributions remain at the center of the process.
